In our case, y is GPA and there are 2 explanatory variables – SAT and Random 1,2,3. The adjusted R-squared is always smaller than the R-squared, as it penalizes excessive use of variables. In other words, SAT scores explain 41% of the variability of the college grades for our sample. Unfortunately, regressions explaining the entire variability are rare.

Combining R-squared with Other Metrics

To help navigate this confusing landscape, this post provides an accessible narrative primer to some basic properties of R² from a predictive modeling perspective, highlighting and dispelling common confusions and misconceptions about this metric. With this, I hope to help the reader to converge on a unified intuition of what R² truly captures as a measure of fit in predictive modeling and machine learning, and to highlight some of this metric’s strengths and limitations. Aiming for a broad audience which includes Stats 101 students and predictive modellers alike, I will keep the language simple and ground my arguments into concrete visualizations. The regression model on the left accounts for 38.0% of the variance while the one on the right accounts for 87.4%. The more variance that is accounted for by the regression model the closer the data points will fall to the fitted regression line. Theoretically, if a model could explain 100% of the variance, the fitted values would always equal the observed values and, therefore, all the data points would fall on the fitted regression line.

ArE LOW R-SQUARED VALUES INHERENTLY BAD?

Iliya started teaching at university, helping other students learn statistics and econometrics. Inspired by his first happy students, he co-founded 365 Data Science to continue spreading knowledge. He authored several of the program’s online courses in mathematics, statistics, machine learning, and deep learning. Suppose you are searching for an index fund that will track a specific index as closely as possible. In that scenario, you would want the fund’s R-squared value to be as high as possible since its goal is to match—rather than trail—the index. On the other hand, if you are looking for actively managed funds, then a high R-squared value might be seen as a bad sign, indicating that the funds’ managers are not adding sufficient value relative to their benchmarks.

- A value of 1 implies that all the variability in the dependent variable is explained by the independent variables, while a value of 0 suggests that the independent variables do not explain any of the variability.

- Due to clinical medicine’s genetic, environmental, and behavioral factors, high R2 values may not be realistic or expected in this context.

- Arguably this is a better model, becauseit separates out the real growth in sales from the inflationary growth, andalso because the errors have a more consistent variance over time.

Don’t conclude a model is “good” based on the R-squared

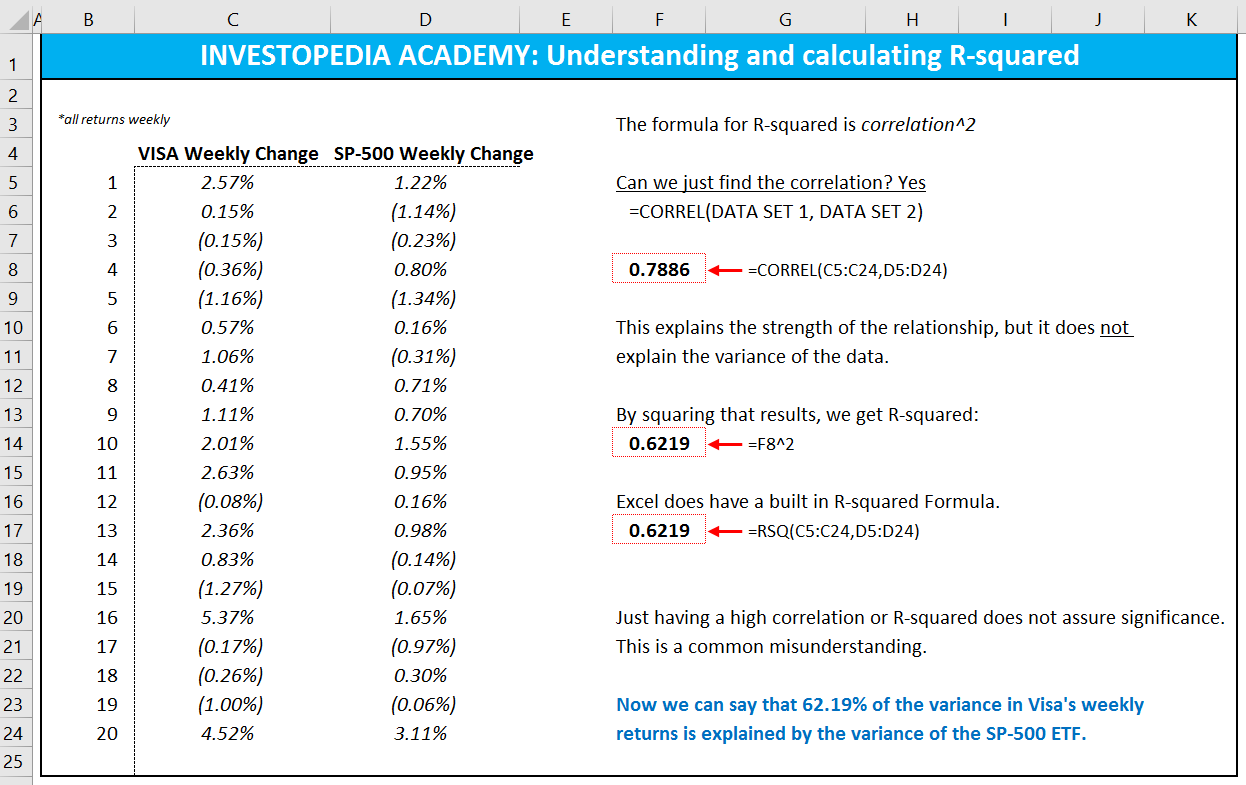

An R-squared of zero means our regression line explains none of the variability of the data. The R-squared is an intuitive and how do you interpret r squared practical tool, when in the right hands. It is equal to variability explained by the regression, divided by total variability.

This may involve exploring higher-order terms, interactions, or transforming variables in different ways to better capture the hidden relationships between data points. In some cases, you’ll have to have strong domain knowledge to get able to get this type of insight outside of the model. Importantly, what this suggests, is that while R² can be a tempting way to evaluate your model in a scale-independent fashion, and while it might makes sense to use it as a comparative metric, it is a far from transparent metric.

Why, then, is there such a big difference between the previous data and this data? The model is mistaking sample-specific noise in the training data for signal and modeling that — which is not at all an uncommon scenario. As a result, models’ predictions on new data samples will be poor. The figure below displays three models that make predictions for y based on values of x for different, randomly sampled subsets of this data. These models are not made-up models, as we will see in a moment, but let’s ignore this right now. However, similar biases can occur when your linear model is missing important predictors, polynomial terms, and interaction terms.

However, in social sciences, such as economics, finance, and psychology the situation is different. There, an R-squared of 0.2, or 20% of the variability explained by the model, would be fantastic. An R-squared of 1 would mean our model explains the entire variability of the data. You can also improve r-squared by refining model specifications and considering nonlinear relationships between variables.

When we fit linear regression models we often calculate the R-squared value of the model. The sample variance is a measure of the variability of the residuals, that is, of the part of the variability of the outputs that we are not able to explain with the regression model. Improving R-squared often requires a nuanced approach to model optimization. One potential strategy involves careful consideration of feature selection and engineering. By identifying and including only the most relevant predictors in your model, you can increase the likelihood of explaining relationships.

The F-test of overall significance determines whether this relationship is statistically significant. 30 studies spanning literature across various disciplines within clinical medicine were evaluated and synthesized to provide a contextualized, nuanced approach to interpreting the R2 in medical literature. For example, in driver analysis, models often have R-Squared values of around 0.20 to 0.40.

Caution is advised, whereas thorough logic and diligence are mandatory. As you can see from the picture above, we have data about the SAT and GPA results of students. We’ve generated a variable that assigns 1, 2, or 3, randomly to each student. But since it is far away from 90%, we may conclude we are missing some important information. Variables such as gender, income, and marital status could help us understand the full picture a little better. The SAT score is one of the better determinants of intellectual capacity and capability.

This would at leasteliminate the inflationary component of growth, which hopefully will make thevariance of the errors more consistent over time. Here is a time series plot showing autosales and personal income after they have been deflated by dividing them by theU.S. All-product consumer price index (CPI) at each point in time, with the CPInormalized to a value of 1.0 in February 1996 (the last row of the data). This does indeed flatten out the trendsomewhat, and it also brings out some fine detail in the month-to-monthvariations that was not so apparent on the original plot. In particular, we begin to see somesmall bumps and wiggles in the income data that roughly line up with largerbumps and wiggles in the auto sales data.